Overview

Interpretability is crucial for understanding and explaining how models make predictions or decisions. This section introduces techniques that offer insights into the importance of different features, the effect of individual variables, and the overall behaviour of the model. These methods enhance transparency and trust in the models used in electricity markets.

Understanding and interpreting the inner workings of machine learning models is essential for several reasons:

Transparency: clear insights into how a model makes decisions help build trust among stakeholders, including regulators, operators, and consumers.

Accountability: interpretability allows for better scrutiny and ensures that the model’s decisions can be explained and justified, which is particularly important in regulated industries like electricity markets.

Debugging and improvement: by understanding which features influence the model’s predictions, data scientists and engineers can identify and correct issues, improving the model’s accuracy and robustness.

These methods provide some transparency, but on their own they carry no causal guarantees: they only indicate how the model’s predictions change when the covariates change. They can therefore reveal associations and effects within the model, but they do not establish causal relationships.
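The gap between an effect inside the model and a causal effect can be illustrated with a small simulation. This is a minimal sketch under assumed conditions, not a method from the chapters: the data-generating process, the hidden driver `z`, and the helper `predict` are all hypothetical and chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5_000

# Hypothetical data-generating process (an assumption for illustration):
# a hidden driver z moves both the observed feature x and the target y.
# By construction, x has NO causal effect on y.
z = rng.normal(size=n)
x = z + 0.1 * rng.normal(size=n)
y = 2.0 * z + 0.1 * rng.normal(size=n)

# Fit a linear model y ~ intercept + slope * x using only the observed feature.
design = np.column_stack([np.ones(n), x])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)
intercept, slope = coef


def predict(x_new):
    """Prediction of the fitted linear model for new values of x."""
    return intercept + slope * np.asarray(x_new)


# Effect inside the model: change in prediction when x moves by one unit.
model_effect = float(predict(1.0) - predict(0.0))

# Causal effect: intervening on x leaves y unchanged, because y depends only on z.
causal_effect = 0.0

print(f"effect of x inside the model: {model_effect:.2f}")
print(f"true causal effect of x on y: {causal_effect:.2f}")
```

The fitted model reports a strong dependence on `x` because `x` is a good proxy for `z`, even though changing `x` in the real system would not move `y`. Any interpretability method that probes the model alone would reflect the same association.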

Content of Interpretability Chapters

Chapter |

Description |

|---|---|

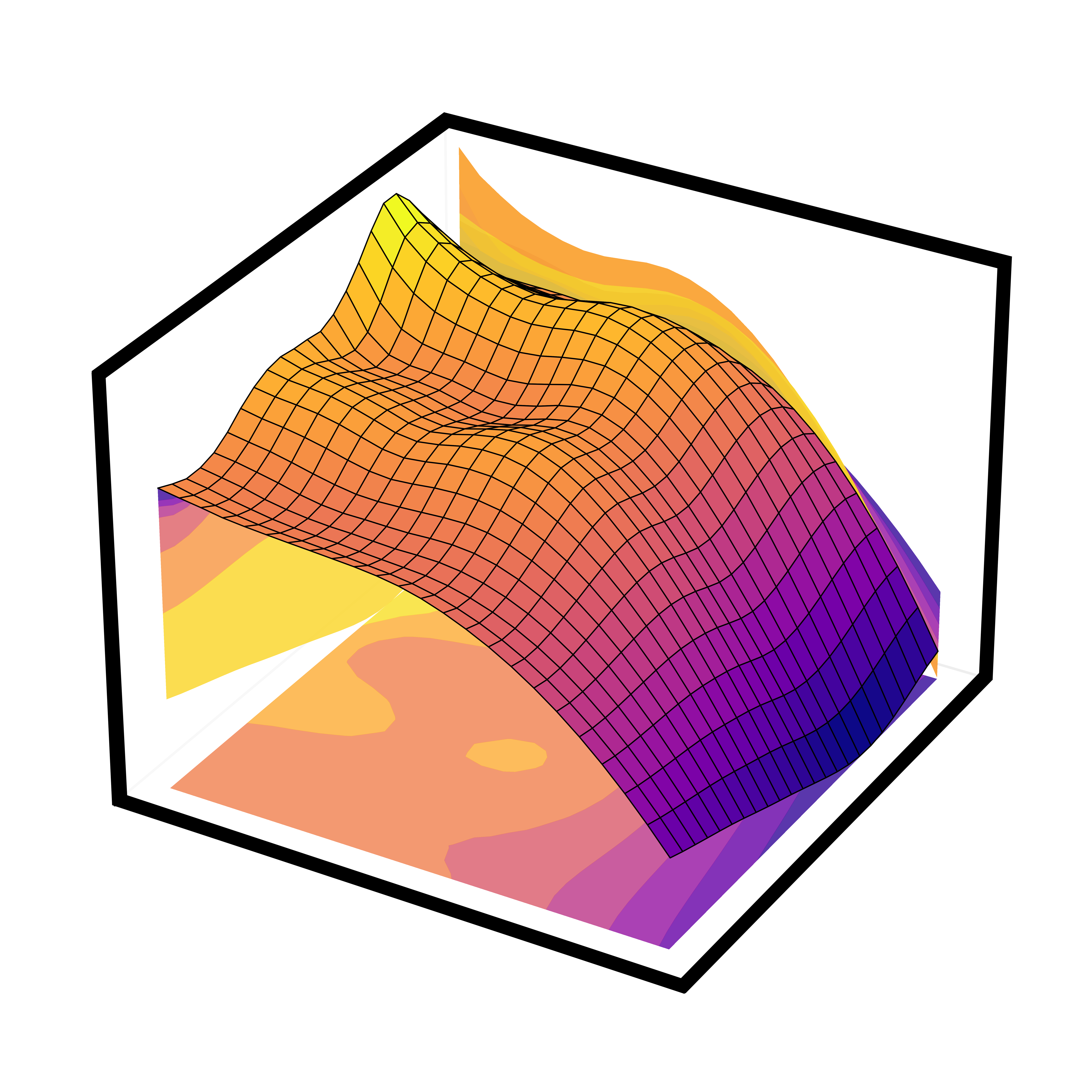

Partial Dependence Plots |

How to visualize the relationship between a feature and the predicted outcome, while averaging out the effects of all other features. |

Accumulated Local Effects |

How to provide a more accurate and unbiased alternative to PDP by accounting for feature interactions. |

Impulse Response Functions |

How to assess the dynamic impact of a change in one variable on another over time in a time series context. |

Shapley Values |

How to obtain a measure of how each feature contributes to the predictions made by the model. |
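
As a preview of the averaging idea behind the Partial Dependence Plots entry above, the computation can be sketched in a few lines. This is a minimal sketch, not the implementation used in the chapters: `partial_dependence` and `toy_model` are hypothetical names, and the two columns (labelled demand and wind here) are simulated.

```python
import numpy as np


def partial_dependence(predict, X, feature, grid):
    """Average prediction when column `feature` is forced to each grid value."""
    values = []
    for v in grid:
        X_mod = X.copy()
        X_mod[:, feature] = v                   # overwrite the feature in every row
        values.append(predict(X_mod).mean())    # average over the other features
    return np.array(values)


def toy_model(X):
    """Hypothetical fitted model: column 0 is demand, column 1 is wind."""
    return 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.5 * X[:, 0] * X[:, 1]


rng = np.random.default_rng(1)
X = rng.normal(size=(1_000, 2))     # simulated feature matrix
grid = np.linspace(-2.0, 2.0, 5)    # values of demand at which to evaluate

pd_demand = partial_dependence(toy_model, X, feature=0, grid=grid)
print(np.round(pd_demand, 2))
```

Each entry of `pd_demand` is the model's mean prediction with demand fixed at one grid value and wind left at its observed values, so the resulting curve describes the model's average response to demand.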